I develop and oversee systems for analysis.

I have a decade of experience working for or collaborating with public-sector departments and agencies, primarily in national security and military affairs. I have worked as a researcher, an analyst, and a manager overseeing analysis, training, data products, and data system transformations.

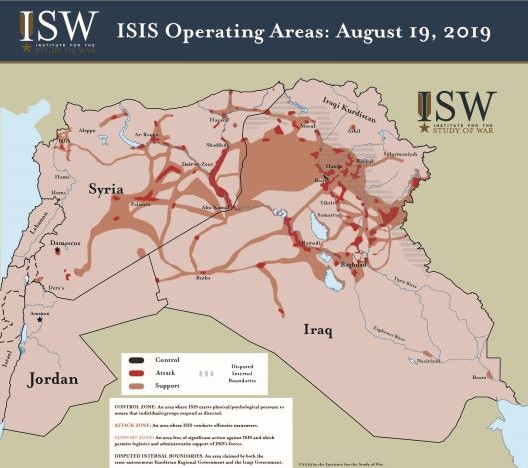

I spent approximately four years working at the Institute for the Study of War, a defense think tank. While at ISW, I authored more than 50 publications, developed new analytics products, and led teams. I contributed to institutional initiatives on military learning, readiness, and the future of conflict.

After ISW, I earned a graduate degree from Carnegie Mellon University, where I focused on applying data science and machine learning to large-scale problems in the public sector. I worked on projects and fellowships for the Air Force's inspection agency, the Federal Reserve's research division, and the Census Bureau's economics directorate.

I am currently a Data Scientist at the Center for Army Analysis.